Graphics Processing Units (GPUs) have undergone a remarkable evolution since their inception, transforming from simple graphics accelerators into essential components of modern computing. Initially designed to render images and graphics for video games, GPUs now play a critical role in many fields, including artificial intelligence (AI), machine learning, and scientific simulation. This article traces the history of GPUs, explains how they work, and examines their growing significance in contemporary technology.

The Birth of GPUs

Dedicated graphics chips appeared as early as the 1980s, when most computer graphics work was still handled by the CPU. The need for faster rendering of images and animations drove the development of increasingly specialized hardware. In 1999, NVIDIA introduced the GeForce 256, which it marketed as the world's first GPU. It performed transform and lighting (T&L) in hardware, taking over from the CPU the geometry calculations that position and light 3D objects, and allowing more complex and realistic scenes to be rendered. This marked a significant shift in how graphics were processed and laid the groundwork for modern GPUs.

Advancements in Graphics Technology

As technology progressed, the capabilities of GPUs expanded dramatically. The introduction of programmable shaders in the early 2000s replaced much of the fixed-function pipeline with small programs that developers could write to run on the GPU for each vertex or pixel. This enabled real-time rendering of complex visual effects, such as dynamic lighting and shadows, enhancing the overall gaming experience.

By the mid-2000s, GPUs had grown into large arrays of programmable cores, and programming models such as NVIDIA's CUDA, announced in 2006, made it practical to run non-graphics code on them. Because a GPU performs many calculations simultaneously, it became useful not only for rendering but also for general-purpose computing, an approach known as GPGPU (General-Purpose computing on Graphics Processing Units). This paved the way for GPUs to be used in fields beyond gaming, such as scientific research, financial modeling, and data analysis.

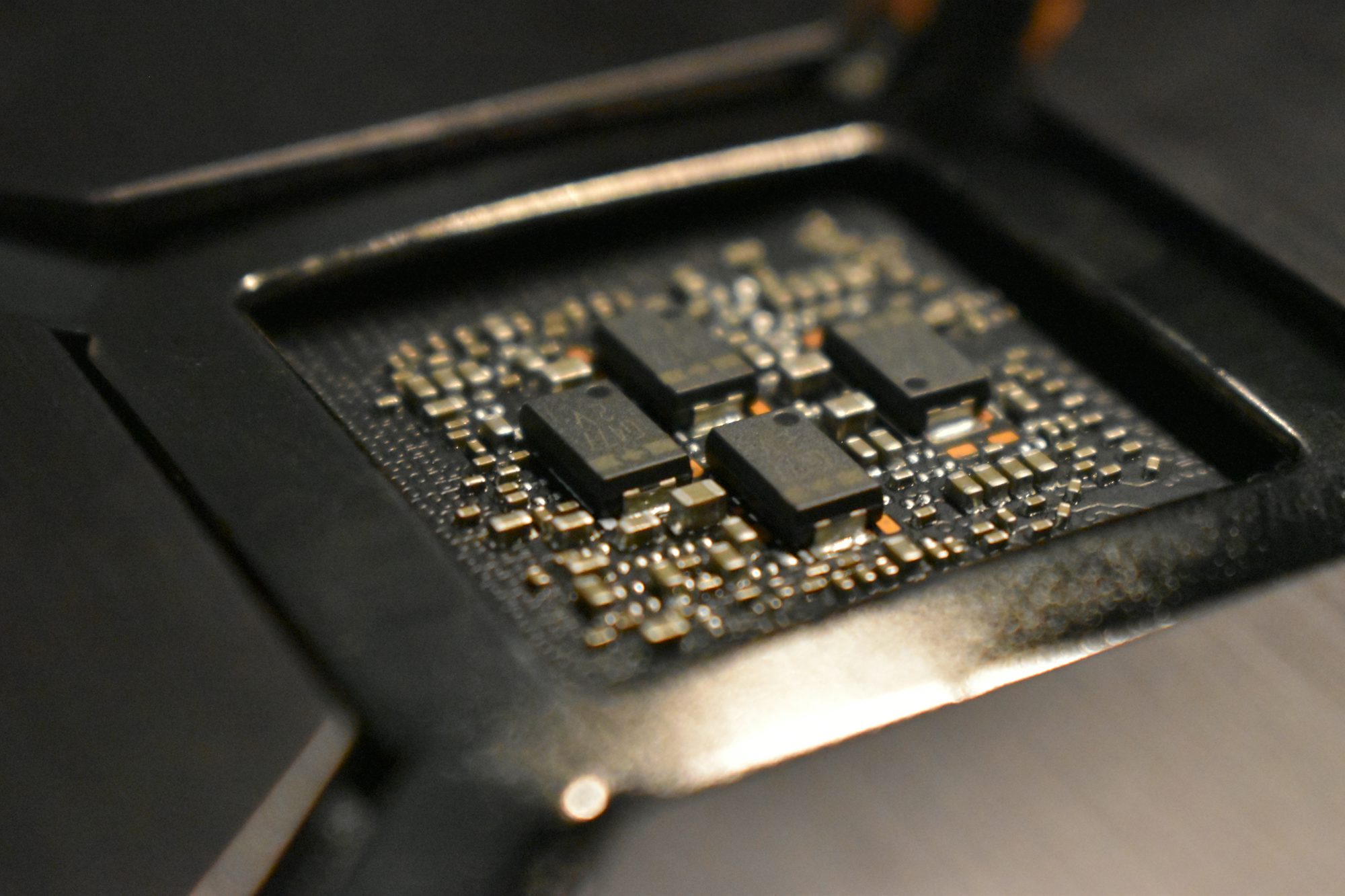

How GPUs Work

GPUs are built to perform the enormous volume of arithmetic that rendering requires. A modern GPU contains thousands of small, simple processing cores that work in parallel, allowing it to perform many calculations at once. This parallel architecture makes GPUs exceptionally powerful for data-parallel tasks, in which the same operation is applied independently to many data elements, such as every pixel in a frame.
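
GPU programming models expose this parallelism as a "kernel": a function written to compute a single element, which the hardware runs as many concurrent threads, each identified by an index. The minimal sketch below emulates that model sequentially in plain Python for SAXPY (`out[i] = a * x[i] + y[i]`), a common introductory data-parallel example. The helper names `saxpy_kernel` and `launch` are hypothetical, the block/thread indexing only loosely mirrors CUDA's `blockIdx`, `blockDim`, and `threadIdx`, and nothing here actually executes on a GPU.

```python
# Sequential emulation of a GPU-style kernel launch (illustrative only).
# saxpy_kernel and launch are hypothetical names, not a real GPU API.

def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    """Work done by ONE logical thread: compute a single output element."""
    i = block_idx * block_dim + thread_idx  # global element index
    if i < len(x):  # guard: the last block may have more threads than elements
        out[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Invoke the kernel once per (block, thread) pair.

    A GPU would run these invocations concurrently; a plain loop gives
    the same result here because no thread reads another thread's output.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
x = [float(i) for i in range(n)]
y = [1.0] * n
out = [0.0] * n
block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # ceil(10 / 4) = 3 blocks
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
print(out)  # [1.0, 3.0, 5.0, 7.0, 9.0, 11.0, 13.0, 15.0, 17.0, 19.0]
```

The sequential loop matches a parallel launch only because each thread's work is independent; that independence is exactly what lets a real GPU run the threads at the same time.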

Rendering Graphics

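When rendering an image, the GPU processes data in several stages, including vertex shading, geometry shading, rasterization, and fragment shading. Vertex shading transforms the 3D coordinates of each vertex into 2D screen positions; the optional geometry stage can add or modify primitives; rasterization determines which pixels each triangle covers; and fragment shading computes each pixel's color, for example by applying textures and lighting. Depth testing, performed alongside or after fragment shading, discards fragments hidden behind closer surfaces. Applied to millions of vertices and pixels in parallel, this multi-stage process enables GPUs to create highly detailed and realistic images efficiently.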
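
To make the stages concrete, here is a toy, CPU-only sketch of one triangle passing through vertex processing, rasterization, and fragment shading. It assumes a camera at the origin looking down the +z axis with a focal length of 1, and it folds the perspective divide and viewport mapping (fixed-function steps on real hardware) into the vertex stage; geometry shading, clipping, textures, and depth testing are omitted. All function names are illustrative.

```python
# Toy software rasterizer: one triangle, no clipping, no depth buffer.
# Assumes a camera at the origin looking down +z, focal length 1, z > 0.

WIDTH, HEIGHT = 16, 8


def vertex_stage(v):
    """Project a 3D point to pixel coordinates (perspective divide + viewport)."""
    x, y, z = v
    x_ndc, y_ndc = x / z, y / z            # normalized device coordinates
    px = (x_ndc + 1.0) * 0.5 * WIDTH
    py = (1.0 - y_ndc) * 0.5 * HEIGHT      # flip y: screen rows grow downward
    return px, py


def edge(a, b, p):
    """Signed-area test: which side of the edge a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(p0, p1, p2):
    """Yield (col, row, w0, w1, w2) for each pixel whose center is covered."""
    area = edge(p0, p1, p2)
    for row in range(HEIGHT):
        for col in range(WIDTH):
            p = (col + 0.5, row + 0.5)     # sample at the pixel center
            w0 = edge(p1, p2, p) / area    # barycentric weights (sum to 1)
            w1 = edge(p2, p0, p) / area
            w2 = edge(p0, p1, p) / area
            if w0 >= 0 and w1 >= 0 and w2 >= 0:
                yield col, row, w0, w1, w2


def fragment_stage(w0, w1, w2, values):
    """Interpolate a per-vertex brightness value at the covered pixel."""
    return w0 * values[0] + w1 * values[1] + w2 * values[2]


vertices = [(-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (0.0, 1.0, 2.0)]
brightness = [0.0, 0.5, 1.0]
screen = [[" "] * WIDTH for _ in range(HEIGHT)]

projected = [vertex_stage(v) for v in vertices]
for col, row, w0, w1, w2 in rasterize(*projected):
    shade = fragment_stage(w0, w1, w2, brightness)
    screen[row][col] = ".:-=+*#%@"[min(8, int(shade * 9))]

print("\n".join("".join(r) for r in screen))
```

On a GPU, the per-vertex and per-pixel loops are what run in parallel: each pixel's coverage test and color calculation is independent of every other pixel's.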

Beyond Graphics: The Rise of AI and Machine Learning

In recent years, the role of GPUs has expanded significantly due to the rise of artificial intelligence and machine learning. Training machine learning models often requires processing vast amounts of data and performing enormous numbers of matrix multiplications, which map well onto GPU architecture because their many individual multiply-add operations are largely independent. Many AI frameworks, such as TensorFlow and PyTorch, have been optimized to leverage GPUs, leading to substantially shorter training times.
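
A rough way to see why this workload suits GPUs: a fully connected neural-network layer is essentially a matrix multiplication, and each output element is an independent dot product. The sketch below is a naive pure-Python matrix multiply plus a cost estimate using the common convention of counting one multiply and one add per term (2mnk operations); the layer dimensions are hypothetical, chosen only for illustration. Frameworks such as PyTorch and TensorFlow hand this work to optimized GPU libraries rather than anything like this loop.

```python
# Why dense layers suit GPUs: every output element is an independent dot product.


def matmul(a, b):
    """Naive (m x k) @ (k x n) product. Each out[i][j] reads only row i of a
    and column j of b, so each could be computed by a separate GPU thread."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def matmul_cost(m, k, n):
    """Return (independent outputs, arithmetic ops), counting 2 ops per term."""
    return m * n, 2 * m * n * k


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]

# Hypothetical layer: batch of 1024 inputs, 4096 features in, 4096 out.
outputs, ops = matmul_cost(1024, 4096, 4096)
print(outputs, ops)  # 4194304 34359738368
```

For this hypothetical case, that is about 4.2 million independent outputs and roughly 34 billion arithmetic operations for a single layer's forward pass, the kind of volume that thousands of GPU cores can share.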

GPUs have become the backbone of deep learning, enabling researchers to build and train neural networks more efficiently. This has resulted in significant advancements in fields such as natural language processing, image recognition, and autonomous systems. The ability to process large datasets in parallel allows for rapid experimentation and development, driving innovation in AI.

The Growing Importance of GPUs in Various Industries

1. Gaming

The gaming industry remains one of the primary drivers of GPU development. Gamers demand high-performance graphics and smooth frame rates, leading to continuous advancements in GPU technology. Recent GPUs, starting with NVIDIA's RTX 20 series in 2018, include dedicated hardware for real-time ray tracing, which simulates the paths of light rays to produce more realistic lighting, shadows, and reflections in games. As gaming technology evolves, GPUs will continue to play a crucial role in delivering immersive experiences.
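
The basic query behind ray tracing is: where does a ray first hit a surface? The sketch below answers it for a sphere by solving the quadratic |o + t·d − c|² = r² for the distance t along the ray. It is a simplified illustration; games trace triangles rather than spheres, and dedicated ray-tracing hardware mainly accelerates ray-triangle tests and the traversal of acceleration structures, none of which is modeled here.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance t to the nearest hit in front of the ray origin,
    or None if the ray misses. Solves |o + t*d - c|^2 = r^2 for t."""
    ox, oy, oz = (origin[i] - center[i] for i in range(3))  # vector o - c
    dx, dy, dz = direction
    a = dx * dx + dy * dy + dz * dz
    b = 2.0 * (ox * dx + oy * dy + oz * dz)
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0:
        return None  # no real roots: the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    # Check the nearer root first; skip roots behind the origin.
    for t in ((-b - sqrt_disc) / (2.0 * a), (-b + sqrt_disc) / (2.0 * a)):
        if t > 1e-9:
            return t
    return None


# A ray along +z toward a unit sphere centered 5 units away hits at t = 4.
print(ray_sphere_hit((0, 0, 0), (0, 0, 1), (0, 0, 5), 1.0))  # 4.0
# A ray along +y misses the same sphere.
print(ray_sphere_hit((0, 0, 0), (0, 1, 0), (0, 0, 5), 1.0))  # None
```

A frame traces at least one such ray per pixel, and each ray is independent of the others, so the workload parallelizes naturally across GPU cores.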

2. Data Science and Analytics

In data science, GPUs have become valuable tools for processing large datasets and performing complex analyses. Organizations use GPUs to accelerate data processing tasks, from data cleaning to predictive modeling. Shorter processing times can make near-real-time analysis practical, allowing decisions to be based on more current data.

3. Scientific Research

Researchers in fields such as genomics, climate modeling, and physics have turned to GPUs to perform simulations and calculations that would be impractically slow on conventional CPU-based systems. Many of these workloads, such as grid-based simulations, repeat the same calculation across a very large number of independent points, so GPUs can run them much faster, shortening the path to new discoveries and insights.
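
Many scientific simulations are stencil computations on a grid: each cell's next value depends only on its neighbors' current values. The sketch below assumes the 1D heat equation solved with an explicit finite-difference scheme (an illustrative choice; real simulation codes use many different methods), where the diffusion number `alpha` must stay at or below 0.5 for this scheme to remain stable.

```python
def heat_step(u, alpha):
    """One explicit finite-difference step of the 1D heat equation:
        u_new[i] = u[i] + alpha * (u[i-1] - 2*u[i] + u[i+1])
    with fixed (Dirichlet) boundary values. Every interior cell reads only
    the OLD array, so all cells could be updated at once, one GPU thread each."""
    new = list(u)  # copies the array; u[0] and u[-1] stay fixed
    for i in range(1, len(u) - 1):
        new[i] = u[i] + alpha * (u[i - 1] - 2.0 * u[i] + u[i + 1])
    return new


# A rod held at 100 degrees on the left end and 0 degrees on the right.
u = [100.0] + [0.0] * 9
for _ in range(500):
    u = heat_step(u, alpha=0.25)  # alpha <= 0.5 keeps this scheme stable
print([round(t, 1) for t in u])
```

Because each step reads only the previous array, every interior cell can be assigned its own GPU thread; after enough steps this rod settles toward a straight-line temperature profile between its two fixed ends.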

4. Cryptocurrency Mining

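The cryptocurrency boom has also influenced the demand for GPUs. Proof-of-work cryptocurrencies require miners to compute enormous numbers of cryptographic hashes, searching for an input whose hash meets a network-defined difficulty target. Because each attempt is independent, the search parallelizes well, and miners turned to high-performance GPUs to maximize their hash rates, increasing demand in the market. Bitcoin mining has long since shifted to specialized ASICs, and Ethereum, once the most prominent GPU-mined currency, moved to proof of stake in 2022.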
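
Proof-of-work mining is, in essence, a brute-force search: repeatedly hash candidate inputs, varying a counter called a nonce, until one produces a hash that meets a difficulty target. The toy sketch below uses Python's standard `hashlib` and treats "starts with N hexadecimal zeros" as the target; real protocols such as Bitcoin hash a binary block header with double SHA-256 and compare against a numeric target, so this is a simplification. The function name `mine` is hypothetical.

```python
import hashlib


def mine(block_data: str, difficulty: int, max_nonce: int = 10_000_000):
    """Toy proof-of-work: find a nonce such that SHA-256(block_data + nonce)
    starts with `difficulty` hex zeros. Each nonce is checked independently,
    which is why the search splits naturally across many GPU threads."""
    target_prefix = "0" * difficulty
    for nonce in range(max_nonce):
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest
    return None  # no valid nonce found within max_nonce attempts


result = mine("example block", difficulty=4)
print(result)
```

With a difficulty of 4, about 16⁴ = 65,536 attempts are needed on average. Since every nonce can be tested independently, a GPU can try thousands at once.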

Future Trends in GPU Technology

As technology continues to advance, several trends are likely to shape the future of GPUs:

1. Integration with AI

The integration of AI capabilities within GPUs is expected to deepen. Vendors are already adding hardware tailored to AI workloads; NVIDIA, for example, has included Tensor Cores, dedicated units for matrix arithmetic, in its GPUs since the Volta architecture (2017). Such specialization enables more efficient training and inference and will likely drive further advances in AI applications across industries.

2. Energy Efficiency

As demand for GPUs increases, so does the need for energy-efficient designs. Future GPUs are likely to prioritize performance per watt, reducing power consumption while maintaining high performance. Innovations in cooling technologies and architecture design will be crucial to achieving this balance.

3. Cloud Computing

The rise of cloud computing services has changed how GPUs are accessed and utilized. Companies can now leverage cloud-based GPU resources for scalable computing power without the need for significant upfront investments. This trend will likely continue, enabling organizations to access powerful GPU capabilities on demand.

4. Next-Generation Architectures

Architectures such as NVIDIA’s Ampere (2020) and AMD’s RDNA (2019), along with their successors, continue to push the boundaries of GPU performance. Each generation aims to improve processing power, memory bandwidth, and overall efficiency, setting the stage for future advances in gaming and AI.

Conclusion

Graphics Processing Units have come a long way since their inception, evolving into powerful tools that extend far beyond gaming. Their ability to handle massively parallel workloads has made them indispensable in fields such as artificial intelligence, scientific research, and data analytics. As these workloads continue to grow, GPUs are likely to play an increasingly central role in shaping the future of computing, helping industries unlock new possibilities and drive innovation. The journey of GPUs is far from over.